In terms of enterprise SEO optimization, the technical foundation is just as important as content quality. Large websites with hundreds of thousands of pages often face crawlability and indexing challenges that directly affect search visibility. Google reported that over 60% of enterprise-level sites encounter crawling inefficiencies, which waste crawl budget and limit ranking opportunities.

Unlike small business sites that may rely on quick fixes, enterprises require scalable solutions, solid site architecture, and the expertise of enterprise SEO services to ensure important pages are reachable, searchable, and fully optimized for search engines.

This post covers the key site architecture tips that improve crawlability, reduce wasted resources, and boost the ranking potential of large-scale websites.

Why Site Architecture Matters in Enterprise SEO Optimization

In enterprise SEO optimization, visibility and growth rely heavily on site architecture. It not only dictates how search engines crawl and index content but also shapes how users navigate through the site. Poorly designed architecture creates crawlability issues that directly affect rankings, traffic, and conversions.

For websites with thousands of pages, weak structures lead to crawl budget waste, orphaned content, and inefficient distribution of authority. Botify studies reveal that nearly half of enterprise web content is never indexed by Google due to faulty architecture.

This is why architecture cannot be treated as an afterthought. Partnering with an enterprise SEO agency or leveraging enterprise SEO optimization services ensures scalable fixes such as optimized URL structures, better internal linking, and reduced crawl depth, so high-value content remains discoverable. Without this base, even the best content strategy fails.

Key Architecture Challenges in Enterprise SEO Optimization:

- Orphan pages – Important content that search engines never find.

- Excessive crawl depth – Critical pages buried more than 5 clicks deep.

- Duplicate URLs – Crawl budget wasted on similar or duplicate content.

- Low internal connectivity – Weak linking between important site areas.

With the help of enterprise SEO optimization services, organizations can overcome these challenges, protect crawlability, and establish a long-term growth trajectory.

Key Site Architecture Tips to Improve Crawlability

To strengthen enterprise SEO optimization, businesses need to focus on practical architectural improvements that directly impact crawlability and indexing. Below are the key strategies that can help large websites scale visibility effectively.

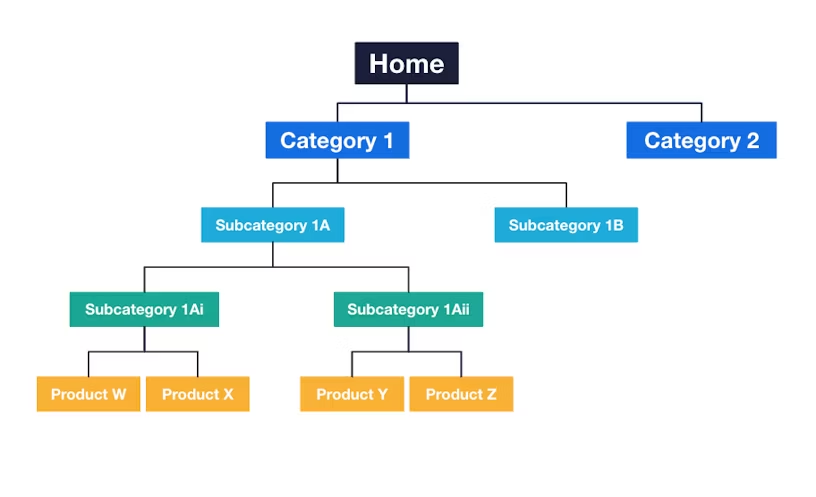

1. Keep Your Crawl Depth Shallow

Googlebot prioritizes pages closer to the homepage. When important pages require more than 3–4 clicks to access, crawl efficiency drops.

- Keep high-value pages (categories, products, services) within 2–3 clicks.

- Use breadcrumbs to strengthen hierarchy and improve discoverability.

- Avoid burying critical content deep in the structure.

Stat to remember: Sites with shallow hierarchies achieve 35% higher indexing rates than those with deep structures (Searchmetrics, 2024).
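Click depth can be measured rather than guessed. The sketch below is a minimal illustration, assuming you already have a crawl export that maps each URL to the URLs it links to. It runs a breadth-first search from the homepage and flags pages deeper than a chosen limit. The `links` data and the `max_depth` value of 3 are hypothetical.

```python
from collections import deque

def click_depths(links, start):
    """Return the minimum number of clicks from `start` to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # in BFS, the first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def too_deep(links, start, max_depth=3):
    """List (url, depth) pairs deeper than `max_depth`, deepest first."""
    depths = click_depths(links, start)
    deep = [(url, d) for url, d in depths.items() if d > max_depth]
    return sorted(deep, key=lambda item: (-item[1], item[0]))

# Hypothetical link graph: homepage -> category -> subcategory -> page 2 -> product
links = {
    "/": ["/shoes", "/about"],
    "/shoes": ["/shoes/running"],
    "/shoes/running": ["/shoes/running/page-2"],
    "/shoes/running/page-2": ["/shoes/running/trail-x"],
}
print(too_deep(links, "/"))  # [('/shoes/running/trail-x', 4)]
```

Pages that never appear in the result of `click_depths` are unreachable from the homepage, which is a separate problem from depth.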

2. Strengthen Internal Linking

Internal linking guides search engines through your site and helps distribute authority. Without it, crawlers may not understand relationships between pages.

Best practices include:

- Linking high-authority pages (homepage, top categories) to new or priority pages.

- Using keyword-rich anchor text naturally (without over-optimization).

- Building content hubs (blogs, guides) that link back to product/service pages.

Pro tip: Many enterprises scale this using automated linking solutions offered by enterprise SEO services, reducing manual effort.
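One way to see where internal linking is weak is to count inbound links per page and compare the result against the full list of URLs you expect to rank. This is a rough sketch under stated assumptions: `known_urls` stands in for a sitemap or CMS export, `links` for a crawl export, and both are made-up data.

```python
def inbound_link_counts(links):
    """Count distinct pages linking to each URL, ignoring self-links."""
    counts = {}
    for source, targets in links.items():
        counts.setdefault(source, 0)
        for target in set(targets):  # count each linking page once
            if target != source:
                counts[target] = counts.get(target, 0) + 1
    return counts

def find_orphans(known_urls, links, homepage="/"):
    """Return known URLs that no other crawled page links to."""
    counts = inbound_link_counts(links)
    return sorted(
        url for url in known_urls
        if url != homepage and counts.get(url, 0) == 0
    )

# Hypothetical data
known_urls = ["/", "/guides/seo", "/services/audit", "/services/legacy-offer"]
links = {
    "/": ["/guides/seo"],
    "/guides/seo": ["/services/audit", "/"],
}
print(find_orphans(known_urls, links))  # ['/services/legacy-offer']
```

The same counts can be sorted in ascending order to build a list of priority pages that deserve links from the homepage or top categories.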

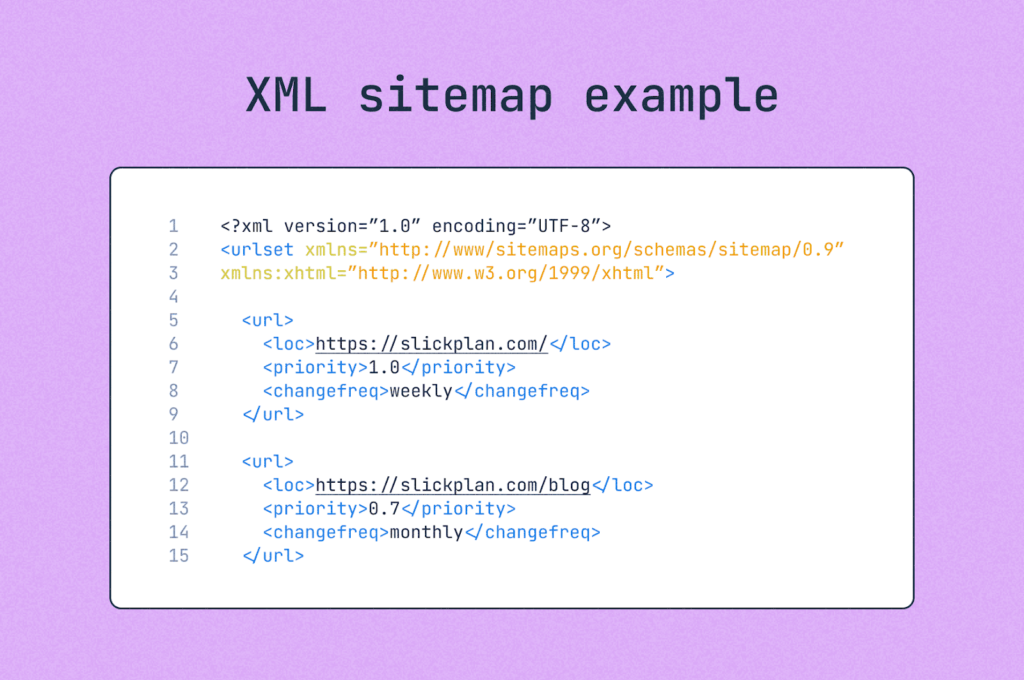

3. Use XML Sitemaps Effectively

XML sitemaps guide search engines to important URLs, which is crucial for enterprise-scale websites.

- Keep each sitemap clean and within the protocol limits of 50,000 URLs and 50 MB uncompressed.

- Use multiple sitemaps for very large sites (news, products, services), tied together with a sitemap index file.

- Exclude noindex, redirected, or duplicate URLs.

Fact: Google confirms that sitemap freshness affects crawl priority. Sites with updated sitemaps see a 28% higher rate of new content discovery.
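Splitting a large URL list into several sitemaps is easy to script. The following is a minimal sketch using Python's standard library, not a full generator: it chunks URLs into groups of at most 50,000 and builds a sitemap index that points to each file. The `example.com` URLs and file names are placeholders, and optional fields such as `lastmod` are left out for brevity.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file URL limit in the sitemap protocol

def chunk(urls, size=MAX_URLS):
    """Split a list of URLs into lists of at most `size` entries."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]

def build_urlset(urls):
    """Serialize one sitemap file as an XML string."""
    root = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(root, "url"), "loc").text = url
    return ET.tostring(root, encoding="unicode")

def build_index(sitemap_locations):
    """Serialize a sitemap index that references each sitemap file."""
    root = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for loc in sitemap_locations:
        ET.SubElement(ET.SubElement(root, "sitemap"), "loc").text = loc
    return ET.tostring(root, encoding="unicode")

# Hypothetical product URLs
urls = [f"https://www.example.com/products/{i}" for i in range(120_000)]
parts = chunk(urls)
locations = [
    f"https://www.example.com/sitemap-products-{n}.xml"
    for n in range(1, len(parts) + 1)
]
index_xml = build_index(locations)
print(len(parts), "sitemap files")
```

Filtering out noindex, redirected, and duplicate URLs should happen before `chunk` is called, so the files only list pages you want indexed.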

4. Optimize URL Structures

Clean, descriptive URLs improve both crawlability and user experience.

- Use short, keyword-rich slugs (e.g., /enterprise-seo-services instead of /services?id=1234).

- Keep URL structures consistent with site hierarchy.

- Avoid unnecessary parameters and session IDs.

Well-optimized URLs help search engines and users quickly understand content relationships.
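To illustrate what "avoiding unnecessary parameters" can look like in practice, here is a small sketch that normalizes URLs before they are linked or listed in a sitemap. The parameter names in `NOISE_PARAMS` are assumptions; each site needs its own list based on which parameters actually change page content.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Assumed list of parameters that never change page content
NOISE_PARAMS = {"sessionid", "sid", "utm_source", "utm_medium", "utm_campaign"}

def normalize_url(url):
    """Drop noise parameters, sort the rest, and trim trailing slashes."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in NOISE_PARAMS
    ]
    kept.sort()  # stable parameter order avoids duplicate URL variants
    path = parts.path.rstrip("/") or "/"
    # The path keeps its case because URL paths are case-sensitive
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, urlencode(kept), "")
    )

print(normalize_url("https://example.com/shoes?size=9&color=red&sid=1"))
# https://example.com/shoes?color=red&size=9
```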

5. Manage Duplicate Content Issues

Large websites often suffer from duplication due to faceted navigation, session IDs, or product variations.

Fixes include:

- Implementing canonical tags for preferred versions.

- Blocking duplicate parameters in robots.txt.

- Consolidating related content to strengthen authority.

Stat: According to SEMrush’s 2024 Site Audit Report, 20% of crawl budgets on enterprise sites are wasted on duplicate content.
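A simple way to find candidates for canonical tags is to group URLs whose page text is identical after whitespace and case are normalized. The sketch below only catches exact duplicates, and choosing the shortest URL as the preferred version is an assumption for illustration, not a rule. The `pages` data is hypothetical.

```python
import hashlib
import re
from collections import defaultdict

def fingerprint(text):
    """Hash page text after collapsing whitespace and lowercasing."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def duplicate_groups(pages):
    """Map each suggested canonical URL to the URLs that duplicate it."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    result = {}
    for urls in groups.values():
        if len(urls) > 1:
            # Assumption: the shortest URL is the preferred version
            canonical = min(urls, key=lambda u: (len(u), u))
            result[canonical] = sorted(u for u in urls if u != canonical)
    return result

# Hypothetical extracted page text keyed by URL
pages = {
    "/shoes/trail-x": "Trail X running shoe",
    "/shoes/trail-x?sessionid=9": "Trail X  running shoe\n",
    "/shoes/road-y": "Road Y running shoe",
}
print(duplicate_groups(pages))
# {'/shoes/trail-x': ['/shoes/trail-x?sessionid=9']}
```

Each duplicate in a group would then carry a canonical tag pointing at the group's key. Near-duplicates, such as product variations with small text differences, need a fuzzier comparison than this.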

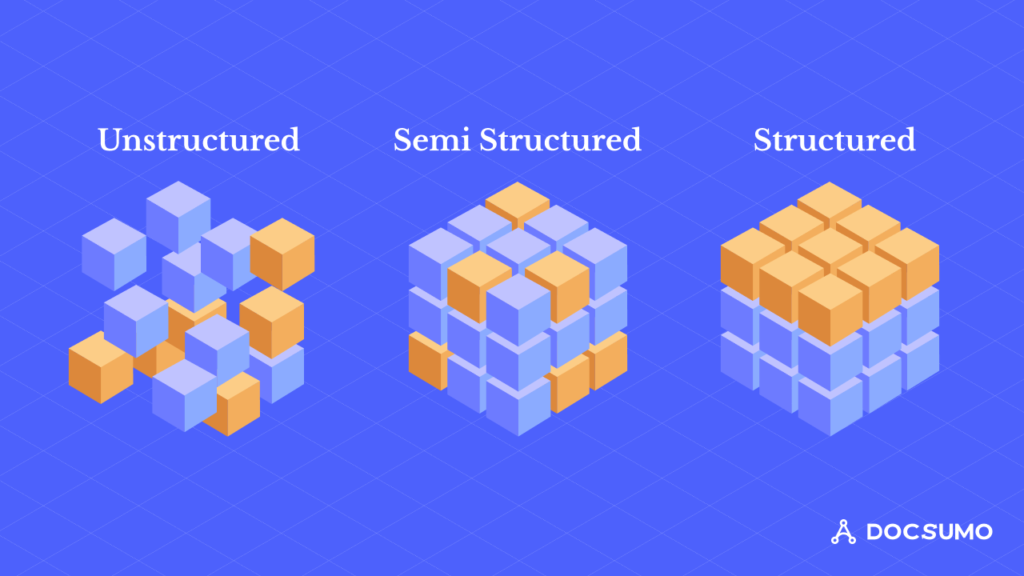

6. Leverage Structured Data and Schema

Schema markup gives search engines context about page content, which supports more accurate indexing and richer visibility in SERPs.

- Implement schema for products, FAQs, breadcrumbs, reviews, and services.

- Validate implementation with Google’s Rich Results Test.

- Apply schema at scale via templates for enterprise-level consistency.

Many enterprise SEO optimization providers automate schema deployment across thousands of pages.
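Applying schema through templates usually means generating JSON-LD from data the page already has. As a minimal sketch, the function below builds a schema.org `BreadcrumbList` from a list of (name, URL) pairs. The helper names and the `example.com` trail are hypothetical, and real templates should still be checked with the Rich Results Test.

```python
import json

def breadcrumb_jsonld(trail):
    """Build a schema.org BreadcrumbList from (name, url) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }

def as_script_tag(data):
    """Wrap the JSON-LD in the script tag a page template would emit."""
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">' + body + "</script>"

# Hypothetical breadcrumb trail for a product page
trail = [
    ("Home", "https://www.example.com/"),
    ("Shoes", "https://www.example.com/shoes"),
    ("Trail X", "https://www.example.com/shoes/trail-x"),
]
print(as_script_tag(breadcrumb_jsonld(trail)))
```

Because the markup is derived from the same trail that renders the visible breadcrumbs, the two stay consistent across thousands of pages.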

7. Prioritize Mobile-First Architecture

With mobile-first indexing, Google primarily crawls mobile versions of websites. Statista (2025) reports that 63% of all searches occur on mobile devices.

Key steps:

- Use responsive designs with scalable navigation menus.

- Compress assets for faster mobile load times.

- Test regularly with Lighthouse or Chrome DevTools device emulation (Google retired its standalone Mobile-Friendly Test in late 2023).

Mobile-first design enhances user experience and improves search engine rankings.

8. Implement Crawl Budget Management

For enterprise sites with millions of URLs, crawl budget management is critical.

Strategies include:

- Blocking low-value pages (thank-you pages, filters).

- Using noindex tags strategically.

- Managing pagination with crawlable links between paginated pages or infinite scroll best practices (Google has said it no longer uses rel=next/prev as an indexing signal).

Google’s John Mueller confirmed that crawl budget directly impacts indexing for large sites, making it a top priority in enterprise SEO optimization.
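Before shipping robots.txt rules that block low-value pages, it helps to test them against sample URLs. The sketch below uses Python's built-in `urllib.robotparser`. The rules and URLs are hypothetical. The standard-library parser matches plain path prefixes, so this example avoids wildcard rules, and results for more complex patterns may differ from how Googlebot interprets them.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules blocking thank-you pages and filter paths
ROBOTS_TXT = """\
User-agent: *
Disallow: /thank-you
Disallow: /filter/
"""

def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt disallows for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]

sample_urls = [
    "https://www.example.com/thank-you/order-1",
    "https://www.example.com/filter/color-red",
    "https://www.example.com/products/trail-x",
]
print(blocked_urls(ROBOTS_TXT, sample_urls))
```

Running a list of priority URLs through the same check is a quick guard against accidentally blocking pages that should be crawled.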

9. Invest in Log File Analysis

Log file analysis shows how crawlers actually interact with your site.

Benefits include:

- Identifying crawl traps, such as infinite URL loops.

- Revealing pages that bots rarely or never crawl.

- Quantifying wasted crawl budget.

Enterprise SEO agencies often include log file insights as part of a wider SEO approach, ensuring more effective crawl management.
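As a rough illustration of what log file analysis involves, the sketch below counts Googlebot requests per path and lists priority pages that received none. It assumes access logs in the common "combined" format. The regular expression, sample lines, and priority list are illustrative only.

```python
import re
from collections import Counter

# Matches the request, status, size, referrer, and user agent of a combined-format line
LOG_PATTERN = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) HTTP/[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_hits(log_lines):
    """Count requests per path where the user agent claims to be Googlebot."""
    hits = Counter()
    for line in log_lines:
        match = LOG_PATTERN.search(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits

def never_crawled(priority_paths, hits):
    """Return priority paths with no Googlebot requests in the log sample."""
    return [path for path in priority_paths if hits[path] == 0]

# Hypothetical log lines
GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
log_lines = [
    f'66.249.66.1 - - [10/Mar/2025:10:00:00 +0000] "GET /shoes HTTP/1.1" 200 5120 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Mar/2025:10:05:00 +0000] "GET /shoes HTTP/1.1" 200 5120 "-" "{GOOGLEBOT}"',
    '203.0.113.7 - - [10/Mar/2025:10:06:00 +0000] "GET /about HTTP/1.1" 200 2048 "-" "Mozilla/5.0"',
]
hits = googlebot_hits(log_lines)
print(never_crawled(["/shoes", "/about"], hits))  # ['/about']
```

User agent strings can be spoofed, so a production analysis would also verify that requests come from genuine Googlebot IP addresses.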

10. Automate Technical SEO at Scale

It is not practical to manually fix metadata, redirects, or schema across 500K+ pages. Companies need to adopt automation.

Automation helps with:

- Large-scale updates of metadata and alt-text.

- Redirect mapping after site migrations.

- Schema implementation across thousands of URLs.

Collaborating with an enterprise SEO agency makes automation precise, scalable, and business-focused.
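Redirect mapping is a good example of a task that suits automation. After a migration, old URLs often redirect to other old URLs, creating chains that waste crawl requests. This minimal sketch takes a hypothetical mapping of source to target, points every source straight at its final destination, and reports any loops it finds.

```python
def flatten_redirects(redirects):
    """Resolve redirect chains; return (flat_map, sources_caught_in_loops)."""
    flat, loops = {}, []
    for source in redirects:
        seen = [source]
        target = redirects[source]
        while target in redirects:  # the target itself redirects again
            if target in seen:      # we came back to a URL already visited
                loops.append(source)
                break
            seen.append(target)
            target = redirects[target]
        else:
            # Reached a URL that does not redirect further
            flat[source] = target
    return flat, sorted(loops)

# Hypothetical redirect map exported after a migration
redirects = {
    "/old-a": "/old-b",
    "/old-b": "/new",
    "/x": "/y",
    "/y": "/x",
}
flat, loops = flatten_redirects(redirects)
print(flat)   # {'/old-a': '/new', '/old-b': '/new'}
print(loops)  # ['/x', '/y']
```

The flattened map can be loaded into server or CDN redirect rules, while the loop list goes back to the migration team for manual review.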

The Role of Enterprise SEO Services in Architecture Optimization

For a large-scale website, site architecture is not just a technical issue; it is the basis of enterprise SEO optimization. While in-house teams may have the knowledge, they often lack the bandwidth or sophisticated systems required to handle thousands of URLs, complex taxonomies, and continual updates. This is where enterprise SEO services play a critical role.

An expert enterprise SEO agency applies the right blend of skills, processes, and automation to manage scale efficiently. Rather than relying on manual fixes that rarely scale, agencies use structured solutions to improve crawlability, authority flow, and crawl budget efficiency.

Stat: Companies that invest in professional enterprise SEO optimization services see a 32% increase in organic traffic within 12 months, compared to brands managing SEO in-house. Gains are also reflected in improved indexation, stronger keyword performance, and measurable revenue growth.

How Enterprise SEO Services Optimize Architecture:

Enterprise SEO services combine technical skills, structured processes, and automation to address complex site architecture challenges at scale.

Here’s how agencies typically optimize architecture for better crawlability and long-term growth:

- Technical expertise – Managing duplicate URLs, crawl depth, and JavaScript-heavy sites at scale.

- Custom workflows – Streamlined URL hierarchies, optimized internal linking, and crawl management.

- Automation tools – Metadata, schema markup, and redirects deployed across thousands of pages.

- ROI tracking – Connecting crawlability improvements directly to traffic and revenue outcomes.

By leveraging professional enterprise SEO services, organizations not only fix immediate architectural flaws but also build a scalable, search-friendly framework that drives long-term growth.

Conclusion

For enterprises, SEO optimization begins with strong site architecture. Without it, even the best content strategies and link-building campaigns will underperform. By improving crawl depth, strengthening internal linking, managing duplicate content, and automating technical fixes at scale, enterprises ensure every valuable page is discovered and indexed.

This is where partnering with an enterprise SEO agency makes a measurable impact. With the right enterprise SEO optimization services, businesses reduce crawl inefficiencies, improve mobile-first performance, and align with Google’s evolving ranking signals.

If you’re ready to scale growth, now is the time to act. A well-structured site architecture not only improves crawlability but also turns organic search into a sustainable revenue engine.

Contact our team today for a customized enterprise SEO audit and strategy roadmap.

FAQs – Enterprise SEO Optimization

Q1: Why is crawlability important for enterprise SEO optimization?

Crawlability ensures that search engines can efficiently discover, navigate, and index your website’s content. For enterprise websites with thousands or even millions of pages, poor crawlability wastes crawl budget and keeps important pages hidden. By improving crawlability, brands ensure faster indexing, higher visibility, and stronger rankings. Effective enterprise SEO optimization directly links crawlability improvements to organic growth.

Q2: How does site architecture impact SEO performance?

A strong site architecture creates a logical hierarchy that search engines and users can follow easily. It distributes link equity across pages, prevents orphan content, and reduces crawl depth so key pages aren’t buried. For enterprises, solid architecture improves discoverability and user experience, leading to better rankings and conversions.

Q3: What role does an enterprise SEO agency play in crawlability optimization?

An enterprise SEO agency brings technical expertise and scalable processes that in-house teams often lack. They manage crawl budgets, streamline URL structures, resolve duplicate content, and implement schema across large websites. Agencies also use automation tools to optimize metadata, redirects, and linking at scale.

Q4: What are common crawlability issues on enterprise sites?

Common issues include duplicate URLs, excessive crawl depth, broken internal links, and inefficient parameter handling. Inconsistent canonical tags and poor mobile-first design also reduce crawl efficiency. These problems waste crawl budgets and limit indexing of high-value pages, requiring structured fixes through enterprise SEO optimization services.

Q5: How often should enterprises audit site architecture?

Enterprises should conduct a full site architecture audit at least quarterly. With frequent product launches, updates, and redesigns, crawl issues can arise quickly. Many organizations also run monthly automated scans through enterprise SEO services to identify problems early and protect traffic.

Q6: Can structured data improve crawlability?

Yes. Structured data provides context that helps search engines interpret and index content more accurately. While schema doesn’t directly increase crawl frequency, it improves indexing precision and visibility in SERPs. Enterprises often deploy schema at scale across product pages, categories, and FAQs to strengthen enterprise SEO optimization.

Q7: How does the crawl budget affect large websites?

Search engines assign a crawl budget based on authority, site health, and frequency of updates. For enterprise sites with millions of URLs, a poorly managed crawl budget leads to wasted crawls on low-value pages. Optimizing crawl budget ensures priority pages are indexed quickly, improving rankings and visibility.
