A recent study shows 46% of entrepreneurs believe AI could make or break their business within five years. Technical SEO AI optimization has become crucial since generative engines now pull from billions of pages. Your website’s technical foundation determines how AI search engines understand, index, and recommend your content to potential customers.

AI engines may skip your site when it lacks structured content or crawls inefficiently, favoring faster and more reliable sources instead. This matters especially for businesses evaluating enterprise SEO services.

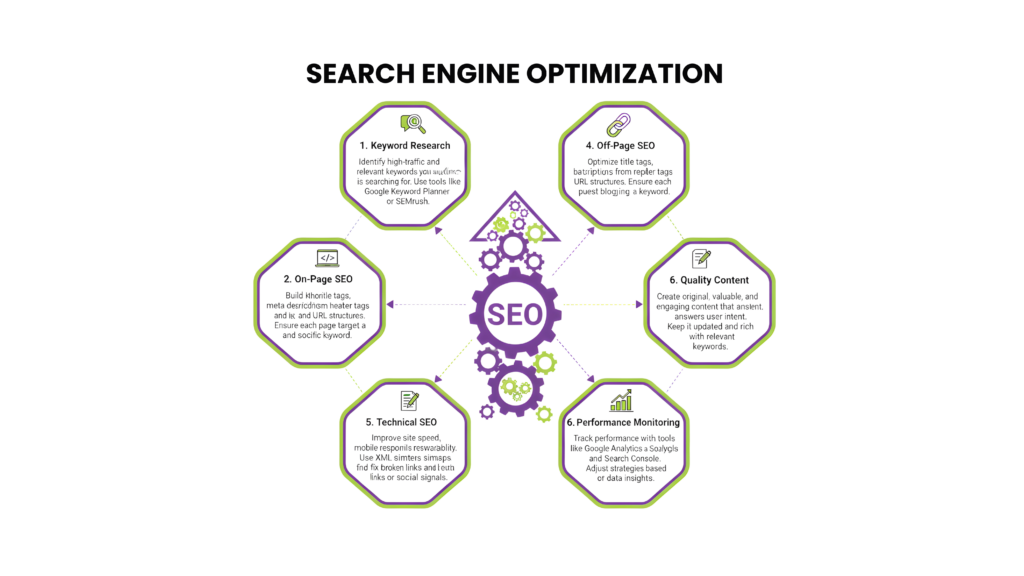

AI algorithms now identify patterns and automate tasks like keyword research and content optimization that once took hours. Structured data has evolved from an option to a requirement for AI optimization. It removes ambiguity and speeds up extraction.

You’ll find the technical SEO basics needed to optimize your website for AI-driven search engines in this piece. The guide covers everything from schema implementation to performance optimization. These useful strategies will help your content stay visible and competitive as search continues to evolve.

What Makes Technical SEO AI-Ready?

Technical SEO for AI search engines requires a fundamental shift in the way you optimize websites. AI engines now do more than match keywords: they interpret meaning, context, and structure. Research shows AI agents make up about 28% of Googlebot’s traffic volume, and this number will keep growing. You need new technical approaches to keep your content visible and authoritative.

Difference Between Traditional SEO and AI SEO

Traditional SEO and AI SEO both aim to help people find content, but they work very differently. Traditional SEO looks at keywords, backlinks, and metadata, while AI SEO focuses on context, structure, and clarity. Traditional SEO asks, “How do I rank?” but AI SEO asks, “How can AI understand and cite my content accurately?”

The way we measure success has changed a lot between these approaches. Traditional SEO looks at traffic, rankings, and click-through rates. AI SEO tracks how often AI tools mention and show your content. Your content can build authority when AI tools like Perplexity or ChatGPT reference it often, even without direct links or clicks.

On top of that, it’s become more important to write content that AI systems can extract, summarize, and present accurately without losing context. The change from keyword matching to understanding meaning has made proper content structure vital for visibility.

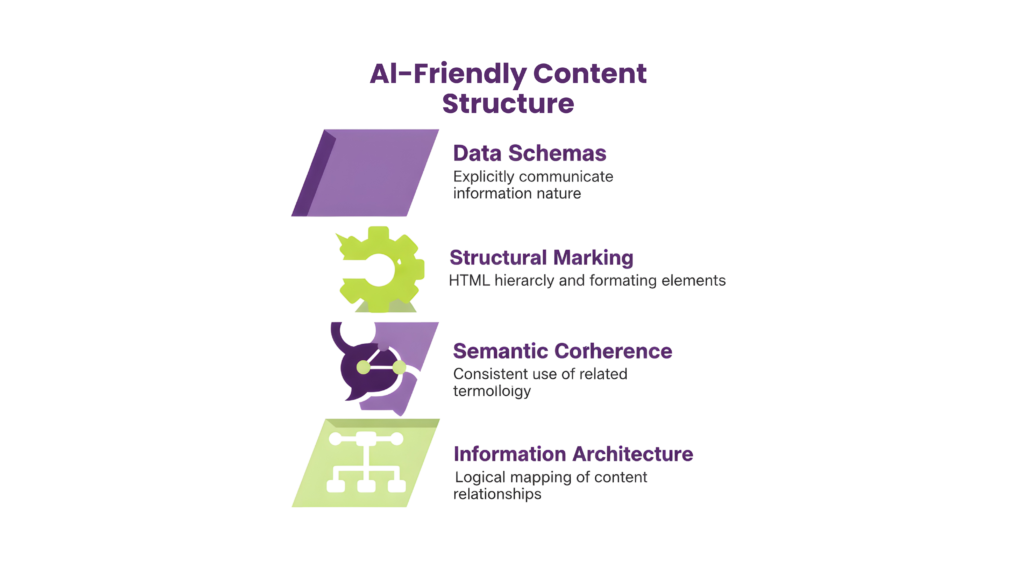

Why Structured Content Matters for AI

Structured content is a vital part of working with AI engines because they process information differently than traditional search crawlers. AI systems need extra structure to interpret your content correctly, unlike human readers, who naturally understand context. That’s why over 72% of websites on Google’s first page now use schema markup.

Well-laid-out content helps AI search engines in several ways:

- Makes information extraction precise and accurate

- Boosts chances of showing up in AI-generated answers

- Gives context that helps AI connect different concepts

- Shows credibility and organization that AI engines value

AI search engines like content with clear headings that follow logical outlines, short paragraphs, bullet points, and plain language with direct answers. These elements help AI understand and process your information better, which affects how often your content shows up in AI-generated responses.
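
One way to check the "logical outline" point is to scan a page's headings and flag places where the hierarchy skips a level. The sketch below is a minimal illustration using only Python's standard library; the names `HeadingCollector` and `outline_problems` are hypothetical, and the rule that a heading should not jump more than one level deeper is a common convention rather than anything an AI engine documents.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collects (level, text) for every h1-h6 element in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []   # list of (level, text) tuples
        self._level = None   # level of the heading currently open, if any
        self._buffer = []    # text fragments seen inside that heading

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._level = int(tag[1])
            self._buffer = []

    def handle_data(self, data):
        if self._level is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            self.headings.append((self._level, "".join(self._buffer).strip()))
            self._level = None


def outline_problems(html):
    """Return warnings for headings that jump more than one level deeper."""
    parser = HeadingCollector()
    parser.feed(html)
    problems = []
    previous = 0
    for level, text in parser.headings:
        if previous and level > previous + 1:
            problems.append(f"h{previous} jumps to h{level} at '{text}'")
        previous = level
    return problems
```

Calling `outline_problems(page_html)` on a template returns an empty list when the outline is consistent, which makes it easy to run as a pre-publish check.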

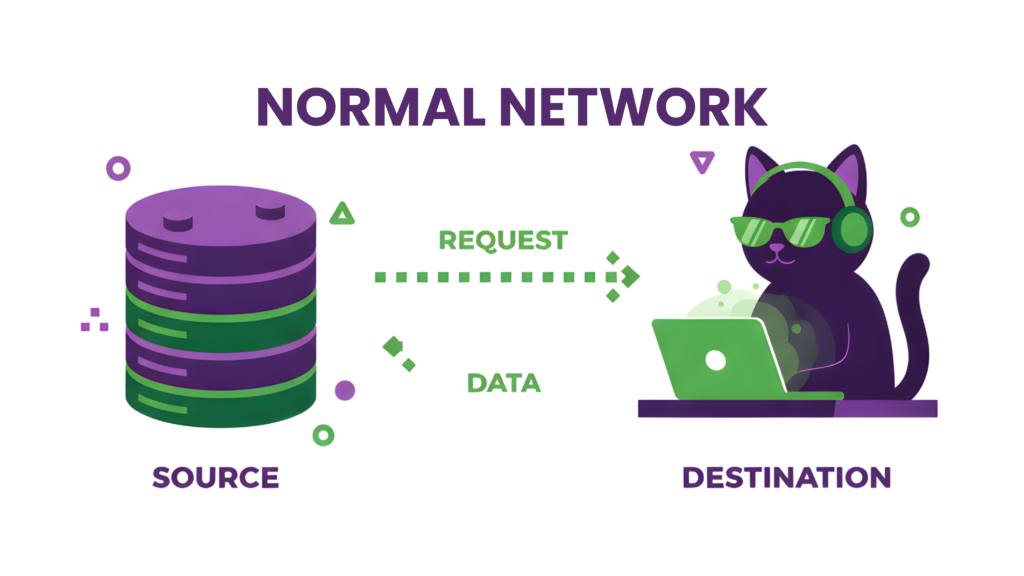

How AI Crawlers Interpret Your Site

AI crawlers look at websites in a completely different way than traditional search engine bots. Many AI crawlers can’t run JavaScript, unlike Googlebot, which processes JavaScript after its first visit. They only see the raw HTML your website serves and skip any content that JavaScript loads or changes.

AI crawlers don’t just scan your page. They take it in, break it into pieces, and study how words, sentences, and concepts connect using attention mechanisms. They don’t focus on meta tags or JSON-LD snippets to figure out your page’s topic. Instead, they look for clear meaning: Does your content make sense? Does it flow well? Does it answer questions directly?

AI crawlers assess:

- How you present information

- The way concepts build on each other (why headings matter)

- Format signals like bullet points, tables, and bold summaries

- Patterns that show what’s important

This explains why messy content might not show up in AI summaries, even with keywords and schema. But a clear, well-formatted post without schema might get quoted directly.

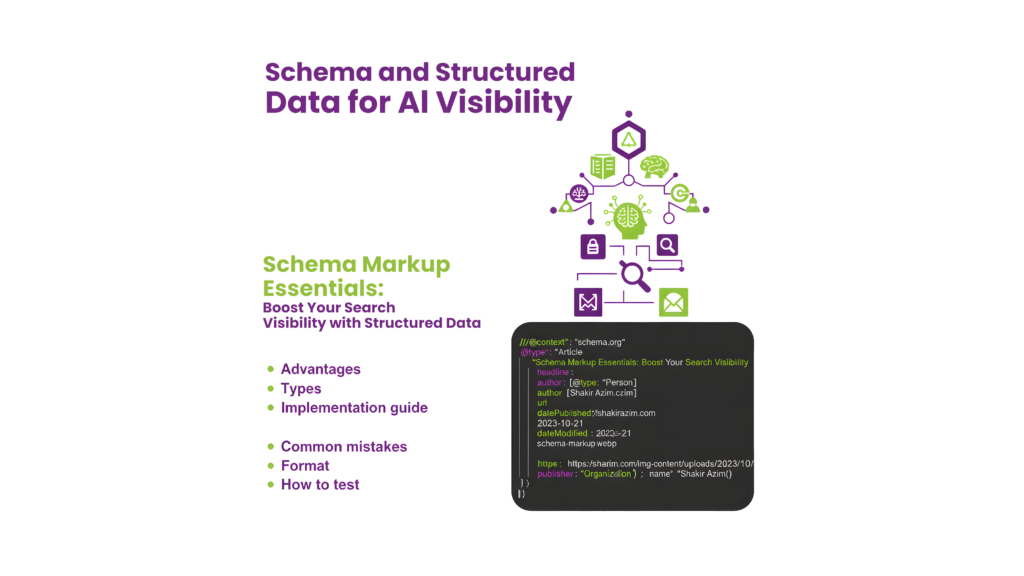

Schema and Structured Data for AI Visibility

Structured data has changed from an optional SEO improvement to a vital part of AI visibility. About 72.6% of pages on Google’s first page use schema markup, which points to its growing importance in technical SEO for AI. The right schema creates a machine-readable knowledge graph that helps AI engines understand your content better.

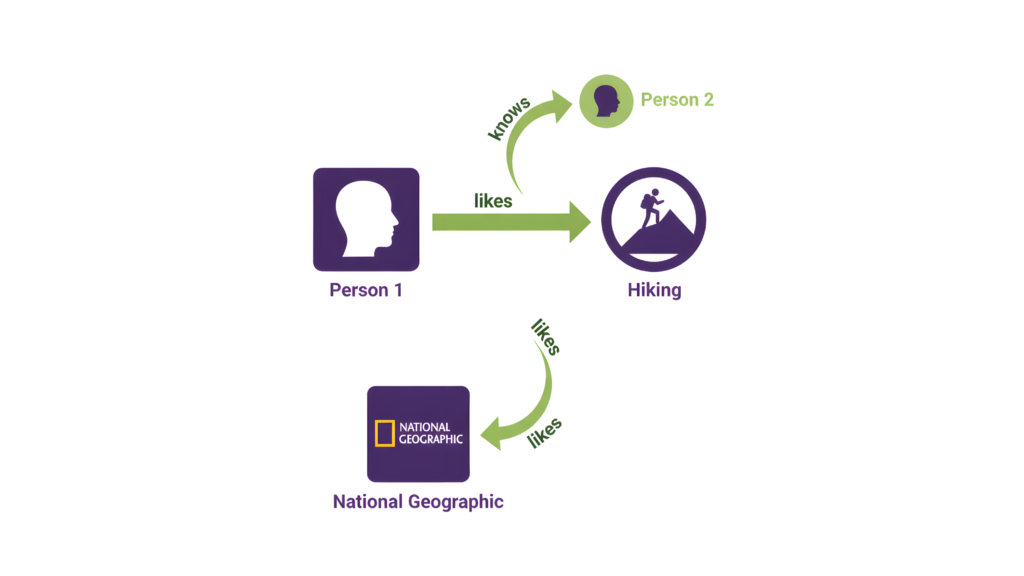

Entity-Based SEO with Schema.org Types

Entity-based SEO builds structured relationships between concepts instead of targeting keywords. Schema.org vocabulary lets you define your content’s meaning rather than just its contents. AI search engines process information through entities and their connections, which makes this approach a natural fit.

The right way to use entity-based SEO:

- Identify key entities on your pages (people, products, services, locations)

- Select appropriate Schema.org types (currently 817 types available)

- Define relationships between entities using properties like @id and sameAs

- Maintain consistent entity identification across your entire site

Schema markup builds a complete content knowledge graph at scale. This structured data layer connects your brand’s entities across your site and beyond.

Aligning with Knowledge Graph Entities

Your site’s entities need connections to established external knowledge sources to maximize AI visibility. AI systems can verify your content’s authenticity and relevance through this process.

The @id property explicitly links related entities across your site. For example, when every page that mentions your CEO references the same @id, those mentions resolve to a single entity, and the resulting knowledge graph shows the relationship clearly.

The sameAs property links your entities to trusted external sources like Wikidata or Wikipedia. Your content gains credibility by lining up with Google’s Knowledge Graph. Here’s an example:

{
  "@context": "https://schema.org",
  "@type": "Person",
  "name": "John Doe",
  "sameAs": [
    "https://www.wikidata.org/wiki/Q12345",
    "https://en.wikipedia.org/wiki/John_Doe"
  ]
}
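
If you generate markup from a CMS or build script, the @id and sameAs ideas above can be sketched in a few lines of Python. This is an illustration under assumptions: the domain `https://www.example.com`, the company name, the `#organization` and `#john-doe` fragment identifiers, and the helper `to_script_tag` are all hypothetical, while the Wikidata and Wikipedia URLs are the placeholders from the example above.

```python
import json

SITE = "https://www.example.com"  # hypothetical domain

# One stable @id per entity; every page that mentions the entity reuses it.
organization = {
    "@type": "Organization",
    "@id": f"{SITE}/#organization",
    "name": "Example Co",
    "url": SITE,
}

ceo = {
    "@type": "Person",
    "@id": f"{SITE}/#john-doe",
    "name": "John Doe",
    "jobTitle": "CEO",
    # Reference the organization by @id instead of repeating its fields.
    "worksFor": {"@id": organization["@id"]},
    # sameAs points at external profiles of the same entity.
    "sameAs": [
        "https://www.wikidata.org/wiki/Q12345",
        "https://en.wikipedia.org/wiki/John_Doe",
    ],
}

graph = {"@context": "https://schema.org", "@graph": [organization, ceo]}


def to_script_tag(data):
    """Serialize JSON-LD into a script tag for the page's HTML."""
    # Escape "</" so a string value cannot close the script element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'
```

Printing `to_script_tag(graph)` gives a block you can place in the page's HTML. Keeping the @id values in one place is the design point: it is what makes entity identification consistent across templates.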

Schema for Articles, Products, and LocalBusiness

AI visibility needs specific schema implementations for different content types. Articles need a complete Article schema with required fields, plus educational level and audience targeting information. This approach improves chances of appearing in AI Overviews.

Products need nested schema types that describe your offerings fully:

- Core Product schema with detailed specifications

- Nested Offer schema containing pricing information

- Review and AggregateRating schemas to showcase customer feedback
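
The nesting described above can be sketched as a small Python helper that returns a Product with an Offer and an AggregateRating inside it. The function name `product_jsonld` and its parameters are hypothetical, and the `InStock` availability is an assumed default that you would replace with real inventory data.

```python
import json


def product_jsonld(name, sku, price, currency, rating_value, review_count):
    """Build Product markup with a nested Offer and AggregateRating."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",          # two decimals, no currency symbol
            "priceCurrency": currency,        # ISO 4217 code such as "USD"
            "availability": "https://schema.org/InStock",  # assumed default
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
    }
```

For example, `json.dumps(product_jsonld("Trail Shoe", "TS-1", 89.5, "USD", 4.6, 128), indent=2)` produces markup you can embed in a product template.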

Organizations with physical locations must use the LocalBusiness schema. Your homepage should start with the Organization or LocalBusiness schema as the central reference point. You should include:

- Business name, logo, and description

- Physical location details using PostalAddress

- Operating hours via openingHoursSpecification

- Social profiles using the sameAs property

- Department information for larger businesses
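
A homepage LocalBusiness block covering most of the fields listed above might be assembled like this. Treat it as a sketch: `local_business_jsonld`, its parameters, and the `#business` identifier are hypothetical names chosen for illustration, and department markup is left out to keep the example short.

```python
def local_business_jsonld(name, url, logo, description, street, city,
                          region, postal_code, country, hours, profiles):
    """Build LocalBusiness markup.

    hours: list of (days, opens, closes), e.g. (["Monday"], "08:00", "17:00")
    profiles: iterable of social profile URLs for sameAs
    """
    return {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "@id": f"{url}/#business",  # hypothetical site-wide identifier
        "name": name,
        "url": url,
        "logo": logo,
        "description": description,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
            "addressCountry": country,
        },
        "openingHoursSpecification": [
            {
                "@type": "OpeningHoursSpecification",
                "dayOfWeek": days,
                "opens": opens,
                "closes": closes,
            }
            for days, opens, closes in hours
        ],
        "sameAs": list(profiles),
    }
```

Other pages can then point back to the business with `{"@id": ".../#business"}` instead of repeating the full block.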

A recent study showed that pages with strong schema markup had higher citation rates in Google’s AI Overviews. Enterprise SEO services now prioritize structured data implementation for AI-ready websites.

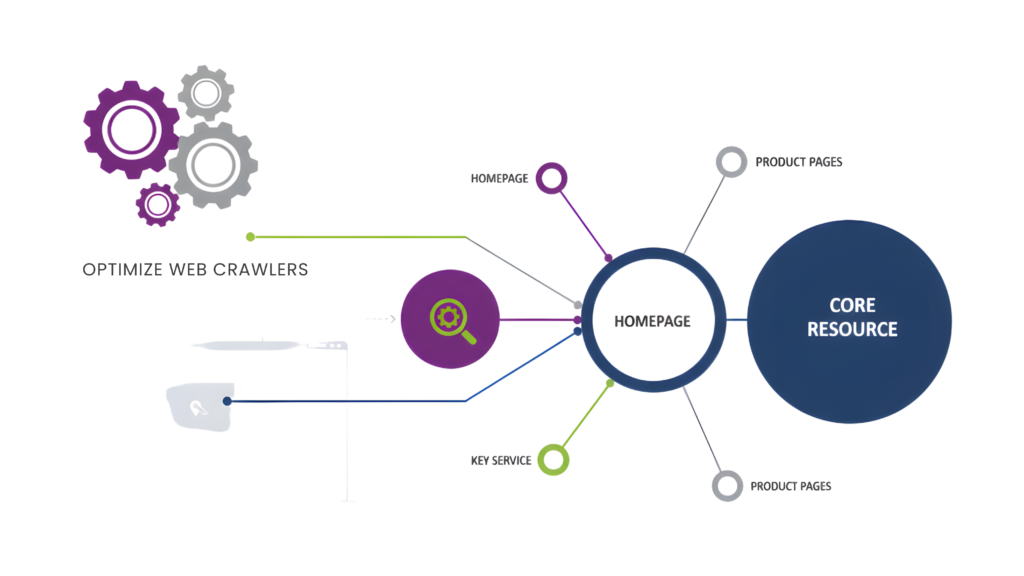

How to Boost Crawl Efficiency and Site Discoverability

AI crawlers work differently from traditional search engines, which means your site needs to be accessible to be visible. Your best content might stay hidden from AI search results if you don’t optimize your site’s findability.

Crawl Budget Optimization for Large Sites

Managing crawl budgets becomes crucial for websites with more than 10,000 pages. This ensures AI crawlers index your most important content first. Crawl budget is the number of pages search engines will crawl within a specific timeframe, usually 24 hours. This limit exists because “the web is a nearly infinite space, exceeding Google’s ability to explore and index every available URL”.

Here’s how to optimize your crawl budget:

- Fix all 404 errors and broken links that waste valuable crawl resources

- Keep clean, logical URL structures to make crawling more efficient

- Speed up your server, since the crawl rate depends on server performance

- Get rid of duplicate content and extra URL parameters that use up crawl resources

- You might want to pre-render JavaScript content so crawlers spend less time rendering

Every crawl request spent on low-value or duplicate content is a missed chance to index your important pages. These optimizations can substantially improve how thoroughly AI crawlers cover enterprise websites.
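
To find the duplicate URLs mentioned above, you can normalize each URL from a crawl export and group the ones that collapse to the same address. The sketch below makes two assumptions you should verify for your own site: that the parameters in `TRACKING_PARAMS` never change page content, and that query parameter order does not matter. The function names are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that never change what the page shows.
TRACKING_PARAMS = {
    "utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content",
    "gclid", "fbclid",
}


def canonicalize(url):
    """Lowercase the host, drop tracking parameters, sort the rest."""
    parts = urlsplit(url)
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    ]
    query.sort()
    path = parts.path or "/"
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), "")
    )


def duplicate_groups(urls):
    """Map each canonical URL to the crawled variants that collapse into it."""
    groups = {}
    for url in urls:
        groups.setdefault(canonicalize(url), []).append(url)
    return {canon: found for canon, found in groups.items() if len(found) > 1}
```

Each group in the result is a candidate for a canonical tag, a redirect, or a parameter cleanup, depending on how the variants are generated.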

Using XML Sitemaps with Accurate Metadata

XML sitemaps act as roadmaps that point AI crawlers straight to your most valuable content. They help crawlers use their limited resources on pages that matter most to your business goals.

Your XML sitemaps should follow these rules:

- Keep individual sitemaps under 50,000 URLs or 50MB uncompressed

- Create separate sitemaps for different content types (products, blog posts, etc.)

- Only include canonical URLs you want indexed

- Use the lastmod element to show content freshness

- Submit your sitemap through Google Search Console to speed up discovery

Breaking sitemaps into logical sections helps search engines process your content more efficiently on large websites. In fact, well-managed sitemaps directly affect how crawlers use their resources, letting more of your crawl budget focus on fresh, high-priority pages.
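
The rules above translate fairly directly into code. The following sketch uses Python's standard library to write sitemap files with a lastmod value per URL and to start a new file every 50,000 URLs. `build_sitemaps` is a hypothetical helper; it does not check the 50MB size limit, and it assumes you pass in canonical URLs only.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file URL limit from the rules above


def build_sitemaps(entries, max_urls=MAX_URLS):
    """Return a list of sitemap documents (UTF-8 bytes).

    entries: iterable of (canonical_url, last_modified) where last_modified
    is a datetime.date.
    """
    entries = list(entries)
    documents = []
    for start in range(0, len(entries), max_urls):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for loc, last_modified in entries[start:start + max_urls]:
            url = ET.SubElement(urlset, "url")
            ET.SubElement(url, "loc").text = loc
            ET.SubElement(url, "lastmod").text = last_modified.isoformat()
        documents.append(
            ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
        )
    return documents
```

To get separate sitemaps per content type, call the function once per type (products, blog posts, and so on) and list the resulting files in a sitemap index.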

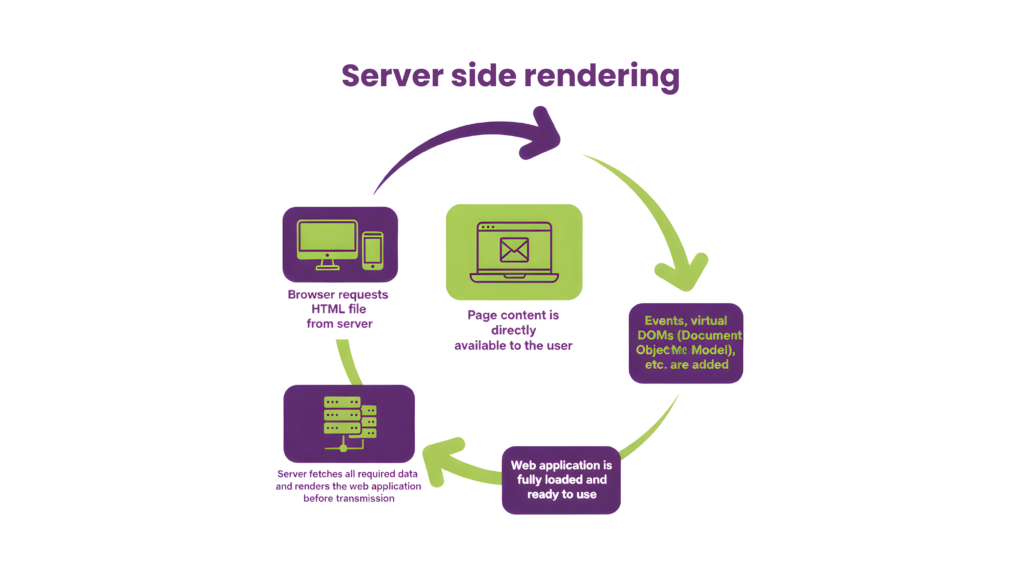

Handling JavaScript with Server-Side Rendering

Most AI crawlers can’t run JavaScript, unlike Googlebot. This means they can’t see client-side rendered content. Many modern websites rely heavily on JavaScript frameworks, which creates a big challenge.

Server-side rendering (SSR) fixes this by processing JavaScript on your server before sending fully-rendered HTML to crawlers. Here’s what you get:

- Content becomes available instantly without needing JavaScript execution

- Search engines index faster because they get complete HTML right away

- All crawlers, including AI bots, can access your content better

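- Paint metrics such as First Contentful Paint often improve because the browser receives ready-to-render HTML, although Time to First Byte (TTFB) can rise if server rendering is slow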

Setting up SSR might look technically complex at first, but the SEO benefits are worth the development effort. AI crawlers might only see blank or partially loaded pages without proper rendering, which drastically reduces your visibility in AI-driven search results.

A hybrid approach often works best. You can combine server-side rendering for critical content with client-side rendering for interactive elements. This balances crawler accessibility with user experience.
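
A quick way to see what a non-JavaScript crawler sees is to check whether your key phrases exist in the raw HTML response, before any script runs. The sketch below only analyzes an HTML string you supply (for example, one fetched with a plain HTTP client). `VisibleText` and `missing_from_raw_html` are hypothetical names, and this is an approximation of crawler behavior, not a description of how any specific AI bot works.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects text outside of script, style, and template elements."""

    SKIP = {"script", "style", "template"}

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def missing_from_raw_html(html, required_phrases):
    """Return the phrases that do not appear in the server-delivered text."""
    parser = VisibleText()
    parser.feed(html)
    text = " ".join(parser.chunks).lower()
    return [phrase for phrase in required_phrases if phrase.lower() not in text]
```

If a page's headline or pricing copy shows up in the returned list, that content probably depends on client-side rendering and is a candidate for SSR or pre-rendering.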

How To Optimize Performance for AI Search Engines

Speed affects how AI search engines view your site’s quality. One study found that a delay of just 100 milliseconds can cut conversion rates by 7%. Your technical SEO success with AI depends on good performance.

Improving Core Web Vitals for AI Ranking

Core Web Vitals show how real users experience your site and feed into how search systems assess page quality. Google recommends achieving good Core Web Vitals scores for success in Search. You should focus on these three vital metrics:

- Largest Contentful Paint (LCP): Keep it under 2.5 seconds for a better user experience

- Interaction to Next Paint (INP): Response time should stay below 200 milliseconds

- Cumulative Layout Shift (CLS): Your score needs to be under 0.1 to avoid layout jumps
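
The three thresholds above are easy to encode as a check against your own field data. In this sketch, the dictionary keys and function names are hypothetical, and the 75th-percentile helper reflects how Google assesses these metrics across page loads; it uses a simple nearest-rank method, so a monitoring tool may report slightly different values.

```python
import math

# "Good" thresholds from the list above.
GOOD_THRESHOLDS = {"lcp_seconds": 2.5, "inp_ms": 200, "cls": 0.1}


def p75(samples):
    """75th percentile of a non-empty list, using the nearest-rank method."""
    ordered = sorted(samples)
    return ordered[math.ceil(0.75 * len(ordered)) - 1]


def failing_vitals(measurements):
    """Return the metrics whose value exceeds the 'good' threshold."""
    return {
        name: value
        for name, value in measurements.items()
        if name in GOOD_THRESHOLDS and value > GOOD_THRESHOLDS[name]
    }
```

A typical use is to compute `p75` per metric from real-user samples for a template, then pass the results to `failing_vitals` to decide which pages need work first.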

AI-powered performance tools check millions of data points right away and give you quick insights into these metrics. You can spot user experience problems before they hurt your rankings.

Reducing Latency with CDN and Fast Hosting

Content Delivery Networks (CDNs) make your site faster for AI crawlers. They store cached versions of your content on servers around the world. This cuts down the distance your data travels, which means better load times.

CDNs give you these key benefits:

- Distance reduction: Your content loads from the closest server

- Hardware optimization: SSDs open files 30% faster than regular HDDs

- File compression: Files become 50-70% smaller

Compressing Assets and Lazy Loading

Smart image compression helps pages load faster without losing quality. WebP format can shrink image files by 60%. SVG formats work great for logos and icons because they stay small.

Lazy loading waits to load resources until they’re needed. You just add the loading="lazy" attribute to images and iframes:

<img src="image.jpg" alt="Product image" loading="lazy">

<iframe loading="lazy" src="video-player.html" title="Demo video"></iframe>

This method speeds up the first page load by waiting to load off-screen images until users scroll near them. AI crawlers can work more efficiently as a result.
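
To find where the attribute is still missing, you can scan a template's HTML for images and iframes without loading="lazy". The sketch below is a minimal audit with hypothetical names (`LazyAudit`, `elements_without_lazy_loading`); it cannot tell which elements sit above the fold, so review the results by hand.

```python
from html.parser import HTMLParser


class LazyAudit(HTMLParser):
    """Records img and iframe elements that lack loading="lazy"."""

    def __init__(self):
        super().__init__()
        self.missing = []  # list of (tag, src) tuples

    def handle_starttag(self, tag, attrs):
        if tag in ("img", "iframe"):
            attributes = dict(attrs)
            if (attributes.get("loading") or "").lower() != "lazy":
                self.missing.append((tag, attributes.get("src")))


def elements_without_lazy_loading(html):
    """Return (tag, src) for each image or iframe that loads eagerly."""
    audit = LazyAudit()
    audit.feed(html)
    return audit.missing
```

Not every hit is a problem: the main above-the-fold image is usually better left to load eagerly, since lazy loading it can delay Largest Contentful Paint.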

Semantic SEO and Internal Linking Strategies

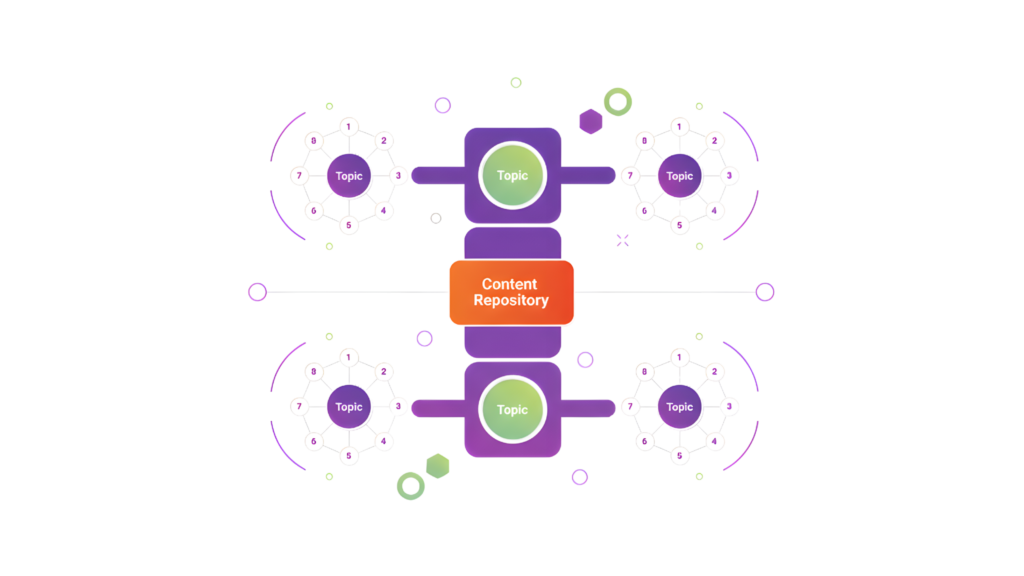

Strategic content organization makes the difference between scattered web pages and a cohesive, AI-friendly website. Search engines now focus more on topics than keywords, making topic clusters a vital part of semantic SEO.

Building Topic Clusters and Content Hubs

A topic cluster connects thematically related pages through a central pillar page with supporting cluster pages. Search engines recognize your pillar page’s authority on specific topics through this structure.

Here’s how you can create effective topic clusters:

- Map 5-10 core problems your audience faces

- Group these problems into broad topic areas

- Build each core topic with subtopics using keyword research

- Arrange your content to match these topics and subtopics

Your pillar page should give a broad overview of the main topic. The cluster pages should take a closer look at specific aspects. The pillar page needs to answer all potential reader questions while being broad enough to support 20-30 related posts.
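
Once a cluster is mapped, it helps to verify that the internal links actually exist. The sketch below models a cluster as a pillar URL plus its cluster pages and reports missing links in either direction. `TopicCluster` and `missing_links` are hypothetical names, and requiring links both ways (pillar to cluster page and back) is an assumption based on common practice.

```python
from dataclasses import dataclass, field


@dataclass
class TopicCluster:
    pillar: str
    cluster_pages: list = field(default_factory=list)


def missing_links(cluster, links):
    """Return (source, target) pairs for links the cluster should have.

    links: dict mapping a page URL to the set of internal URLs it links to,
    for example built from a site crawl export.
    """
    gaps = []
    for page in cluster.cluster_pages:
        if page not in links.get(cluster.pillar, set()):
            gaps.append((cluster.pillar, page))
        if cluster.pillar not in links.get(page, set()):
            gaps.append((page, cluster.pillar))
    return gaps
```

An empty result means every cluster page is reachable from the pillar and points back to it, which is the structure that signals the pillar page's authority on the topic.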

Descriptive Anchor Text for Contextual Linking

Both users and search engines benefit from descriptive anchor text that shows what lies behind a link. Good links provide a clear “information scent”. AI crawlers use your anchor text to understand how pages connect.

Your anchor text should be:

- Descriptive of the destination page

- Concise yet informative

- Unique when linking to different pages

“Techniques for engaging content” works better than generic phrases like “click here” or “read more”.
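
The anchor text rules above can be checked automatically. This sketch collects every link on a page, flags generic anchors, and flags identical anchor text that points to different pages. The `GENERIC` phrase list and the names `AnchorCollector` and `anchor_problems` are assumptions for illustration; extend the list to match your own content.

```python
from html.parser import HTMLParser

# Assumed list of non-descriptive phrases; adjust for your site.
GENERIC = {"click here", "read more", "learn more", "here", "more"}


class AnchorCollector(HTMLParser):
    """Collects (href, text) for every link that has an href."""

    def __init__(self):
        super().__init__()
        self.anchors = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())  # collapse whitespace
            self.anchors.append((self._href, text))
            self._href = None


def anchor_problems(html):
    """Return warnings about generic or ambiguous anchor text."""
    collector = AnchorCollector()
    collector.feed(html)
    problems = []
    targets_by_text = {}
    for href, text in collector.anchors:
        if text.lower() in GENERIC:
            problems.append(f"generic anchor '{text}' -> {href}")
        targets_by_text.setdefault(text.lower(), set()).add(href)
    for text, targets in targets_by_text.items():
        if len(targets) > 1 and text not in GENERIC:
            problems.append(f"anchor '{text}' points to {len(targets)} different pages")
    return problems
```

Running this over rendered article HTML before publishing gives a short list of links to reword.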

Using NLP-Friendly Language in Content

Natural Language Processing (NLP) helps AI grasp your content’s meaning and context. Content that AI understands better also reads better for humans. These tips make your content NLP-friendly:

- Keep related concepts close together in sentences to help AI connect ideas

- Use clear, direct language and remove unnecessary words

On top of that, weave related terms naturally throughout your content. Techniques like Latent Semantic Analysis can spot connected keywords, such as linking “balanced diet” with “healthy eating”. This approach boosts engagement and helps AI systems understand your content better.

Conclusion

AI-driven search engines have reshaped the digital world, and technical SEO basics have changed with them. You’ve seen how AI search engines work differently from traditional ones. Your content needs new approaches to stay visible and competitive.

Your success now depends on content that AI can easily understand and process. Your website should speak machine language through detailed schema markup while staying readable for humans. AI crawlers work differently from regular search engine bots, so crawl efficiency matters more than ever.

Speed and responsiveness have become crucial in this new era. AI engines prefer websites that load quickly and give users a great experience. Your visibility in AI-powered search results will improve when you work on Core Web Vitals, reduce latency, and optimize your assets.

Your site becomes more AI-friendly with semantic SEO and smart internal linking. AI systems can navigate your content more easily when you build topic clusters, use clear anchor text, and write in NLP-friendly language. This creates a knowledge graph that makes sense.

What you do with your technical foundation today will decide whether your business thrives or falls behind in an AI-driven search world. These technical details can be complex, so working with SEO experts often helps you optimize faster.

Want to prepare your website for AI? Rankfast’s SEO services help you set up these technical must-haves. Their AI keyword research tool helps your content line up with new search patterns. Start making your technical SEO ready for AI search engines now to stay ahead of your competition tomorrow.

FAQs

Q1. How does AI-driven SEO differ from traditional SEO?

AI-driven SEO focuses on context, structure, and clarity, while traditional SEO revolves around keywords and backlinks. AI SEO aims to make content understandable and citable by AI systems, emphasizing semantic meaning and structured data.

Q2. What are the key components of technical SEO for AI search engines?

Essential components include structured content, schema markup, crawl efficiency optimization, performance improvements, and semantic SEO strategies. These elements help AI crawlers better understand, index, and recommend your content.

Q3. How important is structured data for AI visibility?

Structured data is crucial for AI visibility. It helps create a machine-readable knowledge graph, allowing AI engines to accurately interpret your content. Implementing proper schema markup can significantly improve your chances of appearing in AI-generated responses.

Q4. What are Core Web Vitals, and why are they important for AI ranking?

Core Web Vitals are metrics that measure real-world user experience, including Largest Contentful Paint, Interaction to Next Paint, and Cumulative Layout Shift. They directly influence how AI engines evaluate your site’s quality and user experience, affecting your rankings.

Q5. How can I optimize my website’s crawl efficiency for AI search engines?

To optimize crawl efficiency, focus on managing your crawl budget, using accurate XML sitemaps, implementing server-side rendering for JavaScript content, and maintaining a clean site structure. These practices ensure AI crawlers can efficiently discover and index your most important content.
